This week’s “Question Of The Week” at my Education Week Teacher blog relates to how we can tell the difference between good and bad education research. As a supplement to next week’s response on that issue, I wanted to bring together some helpful resources that might be understandable to other teachers and me.

You might also be interested in these related “The Best…” lists:

The Best Places To Get Reliable, Valid, Accessible & Useful Education Data

The Best Posts & Articles To Learn About “Fundamental Attribution Error” & Schools

The Best Posts On The Study Suggesting That Bare Classroom Walls Are Best For Learning

Here are my choices for The Best Resources For Understanding How To Interpret Education Research:

A primer on navigating education claims by Paul Thomas.

Matthew Di Carlo at the Shanker Blog has written quite a few good posts on the topic:

In Research, What Does A “Significant Effect” Mean?

Revisiting The CREDO Charter School Analysis

Our Annual Testing Data Charade

The Education Reporter’s Dilemma

A Policymaker’s Primer on Education Research: How to Understand, Evaluate and Use It is from the Mid-continent Research for Education and Learning (McREL). Here’s a non-PDF version.

School Finance 101 often does great data analysis. Bruce Baker’s posts there tend to be a little more challenging for the layperson, but it’s still definitely a must-visit blog.

Here’s a related post:

Hey, Researchers and Policymakers: Pay Attention to the Questions Teachers Ask is by Larry Cuban.

What Counts as a Big Effect? (I) is by Aaron Pallas. Thanks to Scott McLeod for the tip, who also wrote a related post.

Why “Evidence-Based” Education Fails is by Paul Thomas.

How to Judge if Research is Trustworthy is by Audrey Watters.

The “Journal of Errology” has a very funny post titled What it means when it says …. Here’s a sample:

“It has long been known” means “I didn’t look up the original reference”

“It is believed that” means “I think”

“It is generally believed that” means “A couple of others think so, too”

Value-Added Versus Observations, Part One: Reliability is from The Shanker Blog.

Understand Uncertainty in Program Effects is a report by Sarah Sparks over at Education Week.

Limitations of Education Studies is by Walt Gardner at Education Week.

More Evidence of Statistical Dodginess in Psychology? is from The Wall Street Journal.

How To Tell Good Science From Bad is by Daniel Willingham.

When You Hear Claims That Policies Are Working, Read The Fine Print is from The Shanker Blog.

Esoteric Formulas and Educational Research is from Walt Gardner at Education Week.

Effect Size Matters in Educational Research is by Robert Slavin.

Beware Of “Breakthrough” Education Research is by Paul Bruno.

Why Nobody Wins In The Education “Research Wars” is from The Shanker Blog.

Why it’s caveat emptor when it comes to some educational research is by Tom Bennett.

Six Ways to Separate Lies From Statistics is from Bloomberg News.

Thinking (& Writing) About Education Research & Policy Implications is from Bruce Baker.

Quote Of The Day: “When Can You Trust A Data Scientist”

How to read and understand a scientific paper: a guide for non-scientists is from “Violent Metaphors.”

Word Attack: “Objective” is by Sabrina Joy Stevens.

How people argue with research they don’t like is a useful diagram from The Washington Post.

Twenty tips for interpreting scientific claims is from Nature.

5 key things to know about meta-analysis is from Scientific American.

Understanding Educational Research is by Walt Gardner at Ed Week.

Evaluation: A Revolt Against The “Randomistas”? is by Alexander Russo.

What Is A Standard Deviation? is from The Shanker Blog. I’m adding it to the same list.

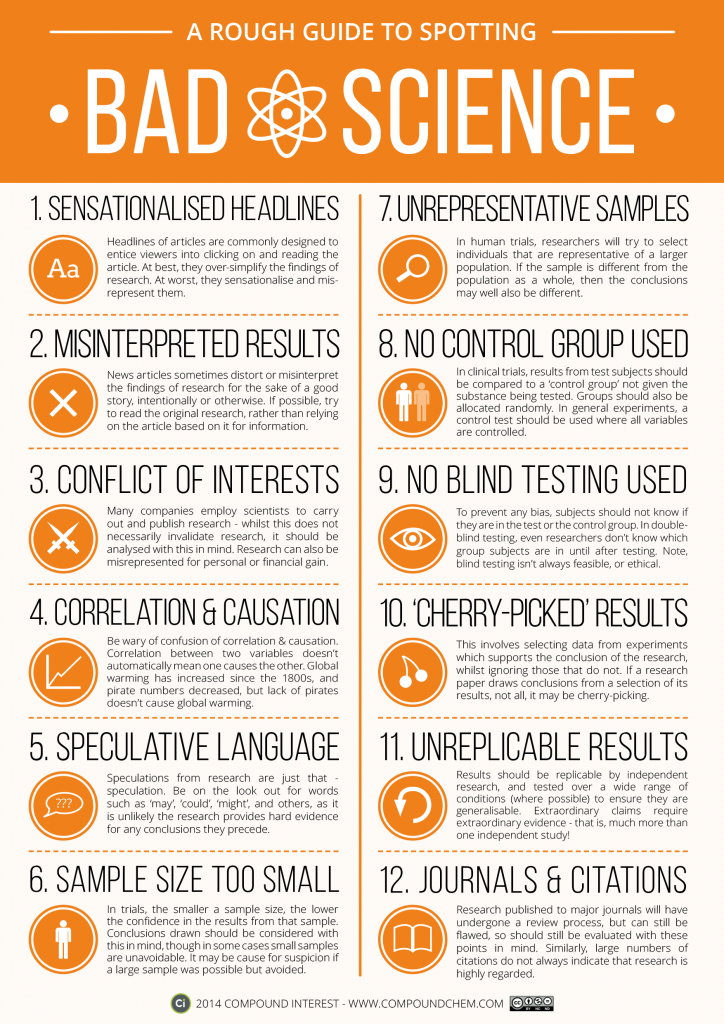

Thanks to Compound Interest

Here’s how much your high school grades predict your future salary is an article in The Washington Post about a recent study. It’s gotten quite a bit of media attention. How Well Do Teen Test Scores Predict Adult Income? is an article in the Pacific Standard that provides some cautions about reading too much into the study. It makes important points that are relevant to the interpretation of any kind of research.

How qualitative research contributes is by Daniel Willingham.

Why Statistically Significant Studies Aren’t Necessarily Significant is from Pacific Standard.

The Problem with Research Evidence in Education is from Hunting English.

The U.S. Department of Education has published a glossary of education research terms.

If the Research is Not Used, Does it Exist? is from The Teachers College Record.

How to Read Education Data Without Jumping to Conclusions is a good article in The Atlantic by Jessica Lahey & Tim Lahey.

Here’s an excerpt:

A Draft Bill of Research Rights for Educators is by Daniel Willingham.

Which Education Research Is Worth the Hype? is from The Education Writers Association.

This Is Interesting & Depressing: Only .13% Of Education Research Experiments Are Replicated

Valerie Strauss at The Washington Post picked up my original post on the lack of replication in education research (This Is Interesting & Depressing: Only .13% Of Education Research Experiments Are Replicated) and wrote a much more complete piece on it. She titled it A shocking statistic about the quality of education research.

Usable Knowledge: Connecting Research To Practice is a new site from The Harvard School of Education that looks promising.

When researchers lie, here are the words they use is from The Boston Globe.

Education researchers don’t check for errors — dearth of replication studies is from The Hechinger Report.

How to Tell If You Should Trust Your Statistical Models is from The Harvard Business Review.

What You Need To Know About Misleading Education Graphs, In Two Graphs is from The Shanker Blog.

The one chart you need to understand any health study is from Vox. I think it has implications for ed research.

Small K-12 Interventions Can Be Powerful is from Ed Week.

Trust, But Verify is by David C. Berliner and Gene V Glass and provides a good analysis of how to interpret education research. Here’s an excerpt:

Quote Of The Day: Great Metaphor On Standardized Tests

How can you tell if scientific evidence is strong or weak? is from Vox.

Frustrated with the pace of progress in education? Invest in better evidence is by Thomas Kane.

What Can Educators Learn From ‘Bunkum’ Research? is from Education Week.

The Difference Between “Evidence-Based” & “Evidence-Informed” Education

Two “Must Use” Resources From The UK On Education Research

Quote Of The Day: “Real-world learning is messy”

Making Sense of Education Research is from The Education Writers Association.

Useful Tweets On Ed Research From #rEDNY

The uses and abuses of evidence in education is not a research study, but a guide to evaluating research. It’s by Geoff Petty.

Education Studies Warrant Skepticism is by Walt Gardner.

Ten reasons for being skeptical about ‘ground-breaking’ educational research is from The Language Gym.

A Quick Guide to Spotting Graphics That Lie is from National Geographic (thanks to Bruce Baker for the tip).

A Trick For Higher SAT scores? Unfortunately no. is by Terry Burnham.

Ed research: The intractable problems http://t.co/Cy3kcjVlqb via @C_Hendrick is there any evidence that research literacy improves outcomes?

— José Picardo (@josepicardoSHS) June 11, 2015

Be skeptical. “. . .research relevant to education policy is always fraught with . . .problems of generalizability” https://t.co/DBXBDZ16XH

— Regie Routman (@regieroutman) June 2, 2015

How Not to Be Misled by Data is from The Wall Street Journal.

The Politics of Education Research & “What Works” Randomised Controlled Trials http://t.co/lLCKypKzt9

— Carl Hendrick (@C_Hendrick) June 24, 2015

Hattie: We need a barometer of what works best, it can establish guidelines as to what is excellent. #visiblelearning pic.twitter.com/t4ZYDsxmcZ

— Corwin Australia (@CorwinAU) July 9, 2015

This is a good video (and here’s a nice written summary of it by Pedro De Bruyckere):

Fifty psychological and psychiatric terms to avoid: a list of inaccurate, misleading, misused, ambiguous, and logically confused words and phrases is from Frontiers In Psychology.

An Interesting “Take” On Research “Reproducibility”

How I Studied the Teaching of History Then and Now is by Larry Cuban.

Unexpected Honey Study Shows Woes of Nutrition Research is from The New York Times and has obvious connections to ed research.

Second Quote Of The Day: Economists Often Forget That “Context Matters”

Nearly all of our medical research is wrong is from Quartz, and can be related to education research.

A Refresher on Statistical Significance is from The Harvard Business Review.

FIVE SIMPLE STEPS TO READING POLICY RESEARCH is from The Great Lakes Center.

Do and don’ts for interpreting academic research via @tombennett71 @researchED1 @EducEndowFoundn in @tes pic.twitter.com/qq9YpdBH9q

— Stephen Logan (@Stephen_Logan) May 21, 2016

Digital Promise Puts Education Research All In One Place is from MindShift.

Anthony Bryk coins a new (at least, to me) term, “practice-based evidence,” in his piece, Accelerating How We Learn to Improve. Here’s how he describes it:

The choice of words practice-based evidence is deliberate. We aim to signal a key difference in the relationship between inquiry and improvement as compared to that typically assumed in the more commonly used expression evidence-based practice. Implicit in the latter is that evidence of efficacy exists somewhere outside of local practice and practitioners should simply implement these evidence-based practices. Improvement research, in contrast, is an ongoing, local learning activity.

This recognition of context was also raised in a different…context by Five Thirty Eight in an interesting article headlined Failure Is Moving Science Forward.

“What Works Clearinghouse” Unveils Newly Designed Website To Search For Ed Research

John Hattie’s Research Doesn’t Have to Be Complicated is by Peter DeWitt.

The importance of context by @dylanwiliam via @Andy__Buck #SLTchat #Honk #ukedchat pic.twitter.com/YI4mkgiTEc

— Stephen Logan (@Stephen_Logan) October 9, 2016

These next tweets from Daniel Willingham are on the same topic, and I’m adding them to the same list:

@benjaminjriley @mpershan I’ve argued basic science *can’t* dictate practice.

— Daniel Willingham (@DTWillingham) October 7, 2016

@mpershan @benjaminjriley …but I would be asking myself “how am I using this practice differently than it’s been tested?”

— Daniel Willingham (@DTWillingham) October 7, 2016

Mathematica Policy Research has released a simple twelve-page guide titled Understanding Types of Evidence: A Guide for Educators.

It’s specifically designed to help educators analyse claims made by ed tech companies but, as the report says itself, the guidance can be applied to any type of education research.

“Practitioners’ Instincts, Observations” Have Important Role In Research

The Unit of Education is from Learning Spy.

Wrong-Headed Criticism Of Medicaid Mirrors Wrong-Headed Criticism Of Schools

Research “Proves” – Very Little appeared in Ed Week.

The Cookie Crumbles: A Retracted Study Points to a Larger Truth is a NY Times article that has implications for education research.

…But It Was The Very Best Butter! How Tests Can Be Reliable, Valid, and Worthless is by Robert Slavin.

Excellent Post On Education Research By Dylan Wiliam

Three Questions to Guide Your Evaluation of Educational Research is from Ed Week.

What We Mean When We Say Evidence-Based Medicine is from The NY Times, and has some relevance to education research.

What does it mean when a study finds no effects? A guide for school and district leaders: https://t.co/92NXMQUlkt pic.twitter.com/yyhw3i6k1j

— IES Research (@IESResearch) June 4, 2017

Very brief introduction to types of research in education – what would you change? pic.twitter.com/KyFrRqX0sO

— Harry Fletcher-Wood (@HFletcherWood) January 26, 2017

The seven deadly sins of statistical misinterpretation, and how to avoid them is from The Conversation.

“Meta-analysis is inappropriate in education.” @dylanwiliam #wismath17 pic.twitter.com/3XdxSRojwv

— Dan Meyer (@ddmeyer) May 4, 2017

After I saw the above tweet, I asked for the source and received these replies:

See 2017 Wisconsin. https://t.co/575PeLsDvs

— Dan Meyer (@ddmeyer) May 4, 2017

Well, chapter 3 of my “Leadership for teacher learning” lays out the argument in detail…

— Dylan Wiliam (@dylanwiliam) May 4, 2017

Just to be clear I said that meta-analysis is inappropriate in education unless you have very similar interventions, which you usually don’t

— Dylan Wiliam (@dylanwiliam) May 9, 2017

When Real Life Exceeds Satire: Comments on ShankerBlog’s April Fools Post is from School Finance 101.

yeah I’m not a big fan of the weeks of learning conversion. My very, very rough, context-dependent rule of thumb for ed research effect sizes is

.01-.03 SDs = tiny

.04-.09 = small to modest

.1-.19 = moderate

.2 or greater = large

— Matt Barnum (@matt_barnum) January 3, 2018
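
For readers who like to see a rule of thumb written out, here is a minimal Python sketch of the bands in the tweet above. The function name, the choice to ignore the sign of the effect, and the handling of the gaps between the quoted ranges (for example, between .03 and .04) are assumptions made for illustration. They are not part of the original rule of thumb, which its author describes as very rough and context-dependent.

```python
def classify_effect_size(sd: float) -> str:
    """Label an effect size (in standard deviations) with the rough bands quoted above.

    Hypothetical helper for illustration only; the cut points fill the gaps
    between the quoted ranges by rounding each band up to the next one.
    """
    size = abs(sd)  # assumption: direction of the effect is ignored
    if size < 0.01:
        # The quoted rule of thumb gives no label below .01
        return "below the quoted range"
    if size < 0.04:
        return "tiny"
    if size < 0.10:
        return "small to modest"
    if size < 0.20:
        return "moderate"
    return "large"


if __name__ == "__main__":
    for value in (0.02, 0.05, 0.15, 0.30):
        print(value, "->", classify_effect_size(value))
```

A label like this is only a starting point. Several of the resources on this list argue that cost, context, and study design matter as much as the number itself.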

Empirical Benchmarks for Interpreting Effect Sizes in Research is a useful report.

“Ask A REL” Archives Are Some Of The Most Accessible Education Research Sites Around…

New Treasure Trove Of Education Research

Teachers Can Learn About Ed Research At The “Learning Zone”

The statements of science are not of what is true and what is not true, but statements of what is known with different degrees of certainty. pic.twitter.com/D5VeDaMzwh

— Richard Feynman (@ProfFeynman) January 13, 2018

Here’s a good review of the common “pitfalls” in education research.

Why the school spending graph Betsy DeVos is sharing doesn’t mean what she says it does is by Matt Barnum.

Effect Sizes: How Big is Big? is by Robert Slavin.

We Can’t Graph Our Way Out Of The Research On Education Spending is from the Shanker Blog.

Effect Sizes and the 10-Foot Man is by Robert Slavin.

The magic of meta-analysis is from Evidence For Learning.

“For educational research to be more meaningful, researchers will have to acknowledge its limits.” https://t.co/7B62OPplsJ #eddata #K12

— EdSurge (@EdSurge) May 18, 2018

“It’s the Effect Size, Stupid. What effect size is and why it is important” by @ProfCoe

2002 piece, as useful now as then #research #EffectSizd https://t.co/QPgFTb6h8I

— Eva Rimbau-Gilabert (@erimbau) May 17, 2018

The Effect Size Effect is from ROBIN_MACP.

Meta-analyses were supposed to end scientific debates. Often, they only cause more controversy is from Science Magazine.

What should we do about meta-analysis and effect size? is from the CEM Blog.

Congratulations. Your Study Went Nowhere. is from The NY Times.

The Problem with, “Show Me the Research” Thinking is by Rick Wormeli.

Teachers, administrators and school board members have to be critical consumers of research says @dylanwiliam at #rEDphil pic.twitter.com/dwRw4iVye8

— Nikki Able (@nikable) October 27, 2018

The Whys and Hows of Research and the Teaching of Reading is by Timothy Shanahan.

The practical meaning of studies that find ‘no effect’ is from The Mindset Scholars Network.

IMPORTANT REFLECTIONS ON EDUCATION RESEARCH

Maybe “Innovation” Isn’t The Holy Grail, After All

Researchers Show Parachutes Don’t Work, But There’s A Catch is from NPR.

Effect Sizes, Robust or Bogus? Reflections from my discussions with Hattie and Simpson is from Ollie Lovell.

Why you must beware of enormous effect sizes appeared in Schools Week.

How to interpret effect sizes in education is from Schools Week.

New #comic: If Literacy Twitter Were Real Life pic.twitter.com/BL48EDK6Bm

— Shawna Coppola (@ShawnaCoppola) February 25, 2019

The military wants to build a bullshit detector for social science studies is from Vox.

Interpreting Effect Sizes In Education Research is from The Shanker Institute.

Statisticians’ Call To Arms: Reject Significance And Embrace Uncertainty! is from NPR.

“How Much Should I Care?” Five questions policymakers and practitioners should ask when sizing up education research appeared in Medium.

The Fabulous 20%: Programs Proven Effective in Rigorous Research is by Robert Slavin.

I have a few quibbles, including this description of effect sizes (in my book, .3 is usually quite large). For more, see this great resource from @MatthewAKraft https://t.co/MvGI69HrKm pic.twitter.com/pEdWtrBpAs

— Matt Barnum (@matt_barnum) April 27, 2019

Ed researchers might want to keep this in mind, too https://t.co/b5cDcRuAz5

— Larry Ferlazzo (@Larryferlazzo) May 10, 2019

Hands down my favorite line of his speech. https://t.co/ldCczr8Ypn

— Erica L. Green (@EricaLG) May 10, 2019

QUOTE OF THE DAY ON THE ROLE OF RESEARCH IN EDUCATION

This analysis from @JohnFPane offers a real caution for journalists, researchers, and policymakers who describe effects sizes in terms of “years/days of learning” https://t.co/EKcOSo833K pic.twitter.com/cyzB4hqzH9

— Matt Barnum (@matt_barnum) June 12, 2019

Different researchers examining the same data to test the same hypothesis came to different conclusions. https://t.co/E1VDLT86Hi

— C. Kirabo Jackson (@KiraboJackson) June 7, 2019

Evidence For Revolution is from Robert Slavin.

Did One Program Really Cost Students 276 Years of Learning? (Spoiler: No.) is from Ed Week.

Correlation is Not Causation and Other Boring but Important Cautions for Interpreting Education Research is by Cara Jackson.

NEW GROWTH MINDSET STUDY, BUT THE CAVEATS ARE MORE INTERESTING THAN THE RESULTS

#AcademicTwitter, we want our research to matter, to get used. The reality is that it often does not & this is largely our own fault. Here is sage wisdom from @RuthLTurley about why research does not get used & what we can do about it. #RPP @RPP_Network @FarleyRipple @wtgrantfdn pic.twitter.com/tVaWwkCOrB

— Matthew A. Kraft (@MatthewAKraft) July 16, 2019

Beyond small, medium, or large: points of consideration when interpreting effect sizes is a new and useful study that’s not behind a paywall.

Some reflections on the role of evidence in improving education is from Dylan Wiliam. Here’s a tweeted excerpt:

Brilliant summary from @dylanwiliam: four questions stakeholders should ask themselves as they consider an educational intervention, from this article https://t.co/bRYaR6f0MG pic.twitter.com/txUWE95I5W

— Daniel Willingham (@DTWillingham) August 29, 2019

Who to Believe on Twitter is from Dan Willingham.

We Must Raise the Bar for Evidence in Education is from Ed Week.

Awareness of Education Research Methods is from the NEA.

When It Makes Sense to Experiment on Students — or “The Zone of RCTs” is by Cara Jackson.

How to overcome the education hype cycle is by David Yeager.

Essential caveats from @dylanwiliam for anyone pursuing the Holy Grail of evidence-informed certainty in education. Worth remembering that certainty is over rated and over sought #rEDPhil19 https://t.co/jbiJeyoFye

— Tom Bennett (@tombennett71) November 16, 2019

When exciting education results are in the news, be skeptical is from The Washington Post.

When incorporating research recommendations in practice, we need to know:

1. What is it good for?

2. When does it work?

3. How do we do it?

4. How much should we use it?

5. What else should we keep or drop? https://t.co/IvlIXSZJJt

— Norma Ming (@mindmannered) December 21, 2019

REL Answered My Ed Research Question & They Can Answer Yours, Too

More research needed is from Adaptive Learning in ELT.

Compared to What? Getting Control Groups Right is by Robert Slavin.

Education’s Research Problem is by Daniel T. Willingham and Andrew J. Rotherham.

Responding to a Study You Just KNOW Is Wrong is by Daniel Willingham.

A Statistics Primer for the Overwhelmed Educator is from The Learning Scientists.

Analysis of What Works Clearinghouse studies finds effect sizes in evaluations conducted by a program’s developers are 80% larger than those done by independent evaluators (g = 0.31 vs 0.14) with ~66% of the difference attributable to publication bias: https://t.co/AXt7wkKn51 ($)

— Dylan Wiliam (@dylanwiliam) August 5, 2020

How, Exactly, Is Research Supposed to Improve Education? is by Dylan Wiliam.

This Dilbert comic can also b applied to some education researchers who recommend practices based on conditions unlikely 2 b found in many real life classrooms——- Is Doctor Of Impossible https://t.co/lVDlbftNZe

— Larry Ferlazzo (@Larryferlazzo) February 7, 2021

Is your classroom a ‘wicked’ learning environment? appeared in TES.

‘There’s a Lot of Potential Learning From Teachers Waiting to Happen’ is the headline of my Education Week column (guest-hosted by Cara Jackson).

Why Evidence-Backed Programs Might Fall Short in Your School (And What To Do About It) is from Ed Week.

Important Questions to Ask About Education Research appeared in Medium.

I think every single one of those 3.7 million, though, can be trained to ask their leaders this: ‘Can you show me the evidence justifying [insert year’s latest bullsh-initiative]?’

Even that degree of critical consumption would change things greatly.

— Eric Kalenze (@erickalenze) September 1, 2021

It’s Time to Dump Deficit-Based Data is from Education Post.

Street Data: A Pathway Toward Equitable, Anti-Racist Schools is from Cult of Pedagogy.

Analysis: There’s Lots of Education Data Out There — and It Can Be Misleading. Here Are 6 Questions to Ask is from The 74.

EFFECT SIZES AND META-ANALYSES: HOW TO INTERPRET THE “EVIDENCE” IN EVIDENCE-BASED is from Retrieval Practice.

I’ve previously shared several good analyses of a recent well-known study that was not very positive about pre-K. In defense of pre-K is clearly the best yet, and offers extremely important commentary on education research in general.

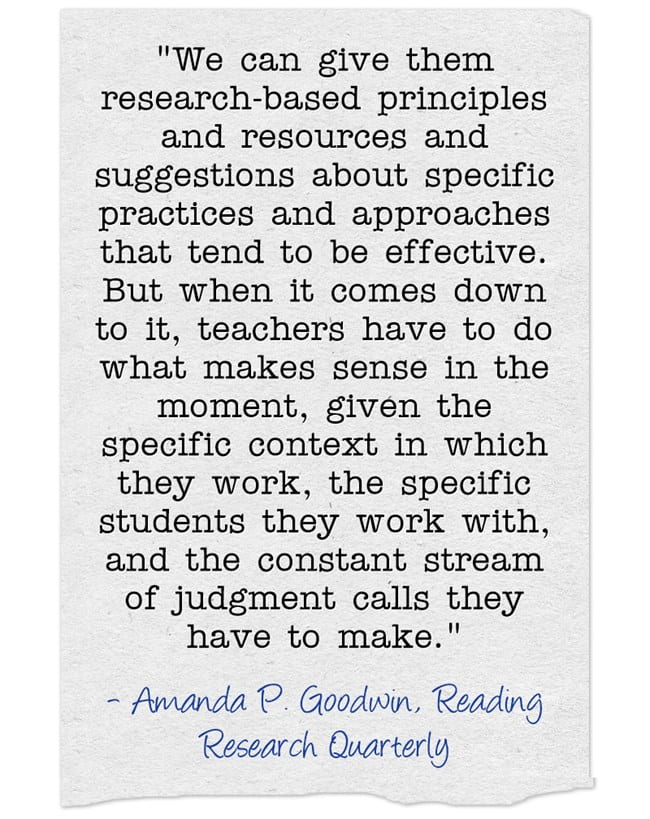

Taking stock of the science of reading: A conversation with Amanda Goodwin is from Phi Delta Kappan.

I’ve embedded an important quote from it below:

“Data analyzed using systematic methods to answer predefined questions or hypotheses generate research evidence.” – @lauren_supplee https://t.co/YpzhOBF7ih

Succinct summary the distinction between data & evidence, something I struggled to address here https://t.co/jQGcatWgct

— Cara Jackson (@cara__jackson) June 22, 2022

THE DANGERS OF MAKING SWEEPING CONCLUSIONS FROM RESEARCH WITHOUT ENOUGH CONTEXT

This is true. It’s also often pretty easy to anticipate these misrepresentations in advance, & to try as much as possible to write about findings in a way to make it as difficult as possible 2 misrepresent them. Misrepresenters r gonna misrepresent, but don’t make it easy 4 them https://t.co/dx5hBnyiUN

— Larry Ferlazzo (@Larryferlazzo) August 22, 2022

HELP ME UNDERSTAND WHY SOME IN EDUCATION SPEND SO MUCH TIME BEATING DEAD HORSES?

Additional suggestions are welcome.

“True scientific knowledge is held tentatively and is subject to change based on contrary evidence.” https://t.co/Tif2lY7Nu4

— Mark Anderson (@mandercorn) September 6, 2022

The Power of Liberatory Research is by Kimberly Parker.

Tes Explains “explains key teaching terminology and answers questions around the use of education research.”

Why you can’t (completely) trust the research is from TES.

Lethal mutations in education and how to prevent them is by Kate Jones and Dylan Wiliam.

Why Many Academic Interventions Don’t Have Staying Power—and What to Do About It is from Ed Week.

Yes. In fact I would argue that practices that are prescriptive about how teachers should teach cannot be evidenced based because in education the evidence is never clear and unambiguous enough to support prescription about how to teach.

— Dylan Wiliam (@dylanwiliam) March 18, 2023

The Promises and Limits of ‘Evidence-Based Practice’ is from Ed Week. I like what it says about how we should talk about “evidence-informed practice” instead of evidence-based or research-based practice. It reminds me of The Best Resources Showing Why We Need To Be “Data-Informed” & Not “Data-Driven.”

Gert Biesta on Why “What Works” Won’t Work: “Education should be understood as a moral, non-causal practice, which means that professional judgements in education are ultimately value judgments, not simply technical judgments.” https://t.co/9D8XyQa29v

— Nick Covington (@CovingtonEDU) July 2, 2023

Abusing Research is by Alfie Kohn.

Can We Trust “Evidence-Based”? is from Peter Greene.

NEW STUDY RAISES IMPORTANT POINT – HOW HELPFUL IS ED RESEARCH?

What Does ‘Evidence-Based’ Mean? A Study Finds Wide Variation. is from Ed Week.

Using Research Evidence is from the Education Endowment Foundation.

WHAT IS THE DIFFERENCE BETWEEN CORRELATION AND CAUSATION? A TEACHER’S GUIDE is from InnerDrive.

Here’s a summary of the distinctions between traditional interviews and expert/elite interviews: pic.twitter.com/dCKRiCshLM

— Eos Trinidad (@eostrinidad) January 30, 2024

9 COGNITIVE BIASES TO LOOK OUT FOR WHEN ENGAGING WITH RESEARCH is from InnerDrive.

Schools are using research to try to improve children’s learning – but it’s not working is from The Conversation.

If you found this post useful, you might want to consider subscribing to this blog for free.

You might also want to explore the 800 other “The Best…” lists I’ve compiled.
